Somebody once told me that to live your life to the fullest, you have to chase your dreams.

This is exactly what I have been working on throughout my entire career, although the biggest step might be the one I am taking today.

When I was only 3 years old, it was my eldest brother who introduced me to computers. To be exact, it was the Commodore 64. I will never forget this moment, especially spending hours copying games from my receiver to my disks.

Ever since that moment, computers have hypnotized me. I love them, and I made it my hobby to learn everything I wanted to know about them. My goal was to do ‘tricks’ with them, to do the things you weren’t supposed to do, or to just break the software and try to fix it again (and hopefully learn something along the way).

Years passed, I grew older, and my goals changed. Somebody asked me what I would like to become when I grew up. I could not put my finger on exactly what I wanted to do, but it had to be something with computers. I wanted to make a living out of my hobby.

Eventually I switched my education to study ICT at the Nova College in Hoofddorp, and after this I found my first job as a helpdesk employee for SNT/Wanadoo.

Shortly after this I found my next goal. With the old-fashioned IT we had been working on, there had to be a better way. My new goal was to automate every action I had to do manually at least 3 times in a row. This did not make all managers happy at the time, since automating would cost precious time without showing them direct results, so I made this my new hobby.

Finally, years later, after I had taught myself Delphi, Visual Basic and Java, a new language started becoming a big player on the market: PowerShell.

It was during this period that I had to do a lot of daily manual actions for Exchange at a customer, and I quickly noticed that writing a few minor scripts made my day a hell of a lot easier.

After I showed this to management, they asked me to do this more often, usually for deployments or virtual servers.

Eventually I came into contact with automating VMware, and later on Hyper-V. I changed my goals again. I wanted to do more with virtualization, and eventually more with cloud technologies.

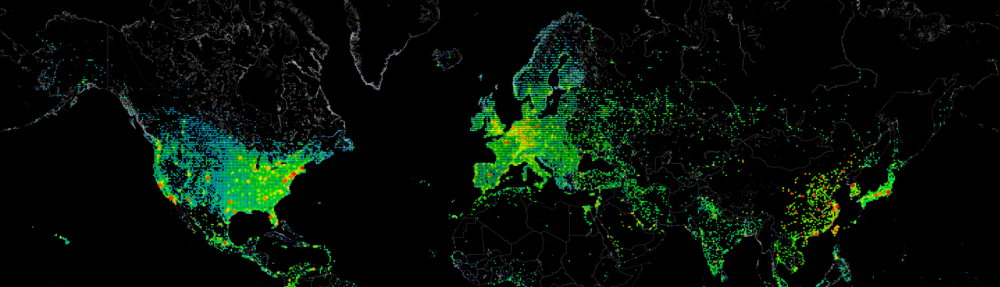

Everybody talked about the ‘Cloud’, but what did that really mean? I did not exactly know yet at that time, but I did know I wanted to learn a lot about it, and share it with the people around me.

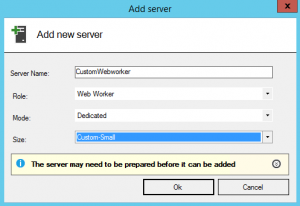

I started combining my new passion for cloud technologies with the scripting knowledge I had been working on. I began to automate deployments, write additional code to manage Hyper-V environments in an easier way, and eventually wrote scripts to deploy ‘Roles’ to servers. Because let’s be honest, how many people want an empty server? They want specific applications or functions and, perhaps most important of all, they want every machine to be exactly the same after a deployment (apart from its specifications).

Again I learned quite a lot, and technologies have changed big time these last few years.

This made me review my goals once again. I wanted to share all this knowledge with more people. I loved talking about the new stuff I had been working on, how you could use it in your daily job, and how to simplify managing your environments.

I started blogging, and giving presentations at customers and at some events. I also started sharing code back to the community on GitHub. This is where I had landed up until now, and what I am still doing on a day-to-day basis.

However, about a year ago a new goal started growing in me. I loved working with automation, new Microsoft cloud solutions, and sharing stuff. But I wanted to do more.

Everywhere I looked, big players and sourcing companies were recruiting and delivering generic systems engineers or generic automation engineers, but nobody positioned themselves on the market as ‘the experts’ for PowerShell or Cloud Automation. It became my dream to see if I could fill this gap.

At about this same time, I was placed at a customer together with my good friend and colleague Jeff Wouters. We had roughly the same ideas, and eventually we sat down together to discuss our ideals and goals, and to see if we could realise them: create a company that is fully specialised in Cloud & PowerShell automation. This is where the new Methos was born.

Since Jeff and I are both very community-minded, it probably won’t surprise you that we are trying to make a difference when it comes to communication between colleagues in the field.

You hire an expert? You receive not only the expertise of this individual, but that of the whole Methos group. We believe that nobody knows everything, and that together we know more.

If there is enough contact between colleagues, people can learn and grow from each other’s expertise. In addition, we will encourage people to go to community events in their own areas of expertise, and we will invite customers to internal master classes on different topics.

Over the next few years, we will be focusing on our new dream and on building Methos. We will do more than our best to make this a successful company, and the cloud and datacentre experts in the Netherlands.
